Reads a text file and parses each line into a (key, value) pair. Everything up to the first tab character is sent to the Mapper as the key, and the remainder of the line is sent to the Mapper as the value.
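The tab-splitting rule above can be sketched in a few lines of Python. This is only an illustration, not Hadoop's implementation: the helper name `parse_key_value` is hypothetical, and treating a line without a tab as (whole line, empty value) is an assumption.

```python
def parse_key_value(line, separator="\t"):
    """Split a line into (key, value) at the first separator (hypothetical helper)."""
    line = line.rstrip("\n")
    key, _, value = line.partition(separator)
    # Assumption: a line with no separator becomes (whole line, "").
    return key, value


for raw in ["user42\tclicked\thome", "no-tab-here"]:
    print(parse_key_value(raw))
```

Only the first tab matters: any later tabs stay inside the value.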

In a MapReduce system, suppose:

- HDFS block size is 64 MB

- Input format is FileInputFormat

- We have 3 files of size 64 KB, 65 MB and 127 MB

How many input splits will the Hadoop framework make?

Hadoop will make 5 splits, as follows:

- 1 split for the 64 KB file

- 2 splits for the 65 MB file

- 2 splits for the 127 MB file
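The arithmetic behind this answer can be checked with a small sketch. It assumes the simple model used in the answer, one split per started block, and the function name `count_splits` is hypothetical. Actual FileInputFormat implementations may let the last split run slightly over the split size, so real counts can differ for files that are only just larger than a block boundary.

```python
import math

KB, MB = 1024, 1024 * 1024
BLOCK_SIZE = 64 * MB  # HDFS block size stated in the question


def count_splits(file_size, split_size=BLOCK_SIZE):
    """Simple model (assumption): one split per started block."""
    return math.ceil(file_size / split_size)


sizes = [64 * KB, 65 * MB, 127 * MB]
per_file = [count_splits(size) for size in sizes]
print(per_file, sum(per_file))  # [1, 2, 2] 5
```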

What is the purpose of RecordReader in Hadoop?

The InputSplit defines a slice of work but does not describe how to access it. The RecordReader class actually loads the data from its source and converts it into (key, value) pairs suitable for reading by the Mapper. The RecordReader instance is defined by the InputFormat.

After the Map phase finishes, the Hadoop framework does "partitioning, shuffle and sort". What happens in this phase?

- Partitioning

Partitioning is the process of determining which reducer instance will receive which intermediate keys and values. Each mapper must determine, for all of its output (key, value) pairs, which reducer will receive them. For any key, regardless of which mapper instance generated it, the destination partition must be the same.

- Shuffle

After the first map tasks have completed, the nodes may still be performing several more map tasks each. But they also begin exchanging the intermediate outputs from the map tasks to where they are required by the reducers. This process of moving map outputs to the reducers is known as shuffling.

- Sort

Each reduce task is responsible for reducing the values associated with several intermediate keys. The set of intermediate keys on a single node is automatically sorted by Hadoop before it is presented to the Reducer.

If no custom partitioner is defined in Hadoop, how is data partitioned before it is sent to the reducer?

The default partitioner computes a hash value for the key and assigns the partition based on this result.
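A minimal sketch of hash-based partitioning follows. It is an illustration of the idea rather than Hadoop's code: the function names are hypothetical, and a Java-style string hash stands in for the key's own hash function so that results are stable across runs.

```python
def java_string_hash(s):
    """Java-style 32-bit string hash, used here only to get stable values."""
    h = 0
    for ch in s:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF
    return h


def default_partition(key, num_reducers):
    """Clear the sign bit, then take the remainder: result is in [0, num_reducers)."""
    return (java_string_hash(key) & 0x7FFFFFFF) % num_reducers


for key in ["apple", "banana", "apple"]:
    print(key, "->", default_partition(key, 4))
```

Because the partition depends only on the key and the number of reducers, every mapper sends a given key to the same reducer.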

What is a Combiner?

The Combiner is a "mini-reduce" process which operates only on data generated by a mapper. The Combiner will receive as input all data emitted by the Mapper instances on a given node. The output from the Combiner is then sent to the Reducers, instead of the output from the Mappers.
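As a sketch of the idea, here is what a word-count combiner does to the output of the mappers on one node. The function name `combine` and the sample data are hypothetical; the point is only that fewer records leave the node.

```python
from collections import defaultdict


def combine(map_output):
    """Sum counts per key locally, before anything is sent to the reducers."""
    totals = defaultdict(int)
    for key, count in map_output:
        totals[key] += count
    return sorted(totals.items())


node_output = [("hadoop", 1), ("hdfs", 1), ("hadoop", 1), ("hadoop", 1)]
print(combine(node_output))  # [('hadoop', 3), ('hdfs', 1)]
```

Four intermediate records shrink to two, which reduces the data moved during the shuffle.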

What is the JobTracker?

The JobTracker is the service within Hadoop that runs MapReduce jobs on the cluster.

What are some typical functions of Job Tracker?

The following are some typical tasks of the JobTracker:

- It accepts jobs from clients

- It talks to the NameNode to determine the location of the data

- It locates TaskTracker nodes with available slots at or near the data

- It submits the work to the chosen TaskTracker nodes and monitors the progress of each task by receiving heartbeat signals from the TaskTrackers

What is a TaskTracker?

A TaskTracker is a node in the cluster that accepts tasks (Map, Reduce and Shuffle operations) from a JobTracker.

What's the relationship between Jobs and Tasks in Hadoop?

One job is broken down into one or many tasks in Hadoop.

Suppose Hadoop spawned 100 tasks for a job and one of the tasks failed. What will Hadoop do?

It will restart the task on another TaskTracker. Only if the task fails 4 times (the default maximum number of attempts, which can be changed) will it kill the job.

Hadoop achieves parallelism by dividing the tasks across many nodes, so it is possible for a few slow nodes to rate-limit the rest of the program and slow it down. What mechanism does Hadoop provide to combat this?

Speculative Execution

How does speculative execution work in Hadoop?

The JobTracker makes different TaskTrackers process the same input. When tasks complete, they announce this fact to the JobTracker. Whichever copy of a task finishes first becomes the definitive copy. If other copies were executing speculatively, Hadoop tells the TaskTrackers to abandon the tasks and discard their outputs. The Reducers then receive their inputs from whichever Mapper completed successfully first.

Using the command line in Linux, how will you

- see all jobs running in the Hadoop cluster

- kill a job

Respectively:

- hadoop job -list

- hadoop job -kill jobid

What is Hadoop Streaming?

Streaming is a generic API that allows programs written in virtually any language to be used as Hadoop Mapper and Reducer implementations.

What characteristic of the Streaming API makes it flexible enough to run MapReduce jobs in languages like Perl, Ruby, awk etc.?

Hadoop Streaming allows arbitrary programs to be used for the Mapper and Reducer phases of a MapReduce job by having both Mappers and Reducers receive their input on stdin and emit output (key, value) pairs on stdout.
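The stdin/stdout contract can be illustrated with a word-count mapper and reducer written as plain functions over text streams. This is a sketch under assumptions: the function names are hypothetical, tab is assumed as the key/value separator, and `run_local` merely simulates "mapper, sort, reducer" in memory instead of running on a cluster. In real streaming scripts the two functions would be called with `sys.stdin` and `sys.stdout`.

```python
import io
from itertools import groupby


def mapper(stdin, stdout):
    """Emit one 'word, tab, 1' line for every word read from stdin."""
    for line in stdin:
        for word in line.split():
            stdout.write(f"{word}\t1\n")


def reducer(stdin, stdout):
    """Sum the counts per word; input lines must arrive sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in stdin)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        stdout.write(f"{word}\t{sum(int(v) for _, v in group)}\n")


def run_local(text):
    """Simulate mapper, sort, reducer in memory (stand-in for the framework)."""
    mapped = io.StringIO()
    mapper(io.StringIO(text), mapped)
    sorted_lines = sorted(mapped.getvalue().splitlines(keepends=True))
    reduced = io.StringIO()
    reducer(io.StringIO("".join(sorted_lines)), reduced)
    return reduced.getvalue()


print(run_local("pig hive pig\nhive pig\n"), end="")
```

Because the programs only read and write text lines, the same pattern works in any language that can use stdin and stdout.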

What is the Distributed Cache in Hadoop?

Distributed Cache is a facility provided by the Map/Reduce framework to cache files (text, archives, jars and so on) needed by applications during execution of the job. The framework will copy the necessary files to the slave node before any tasks for the job are executed on that node.

What is the benefit of the Distributed Cache? Why can't we just have the file in HDFS and have the application read it?

This is because the distributed cache is much faster. It copies the file to all TaskTrackers at the start of the job. If a TaskTracker then runs 10 or 100 mappers or reducers, they will all use the same local copy. On the other hand, if you write code in the MapReduce job to read the file from HDFS, every mapper will try to access it from HDFS, so a TaskTracker that runs 100 map tasks will try to read this file 100 times from HDFS. Also, HDFS is not very efficient when used like this.

What mechanism does the Hadoop framework provide to synchronize changes made in the Distributed Cache during runtime of the application?

This is a trick question. There is no such mechanism. The Distributed Cache is by design read-only during job execution.

Have you ever used Counters in Hadoop? Give us an example scenario.

Anybody who claims to have worked on a Hadoop project is expected to have used counters.

Is it possible to provide multiple inputs to Hadoop? If yes, how can you give multiple directories as input to the Hadoop job?

Yes. The input format class (for example FileInputFormat, via methods such as addInputPath) provides methods to add multiple directories as input to a Hadoop job.

Is it possible to have Hadoop job output in multiple directories? If yes, how?

Yes, by using the MultipleOutputs class.

What will a hadoop job do if you try to run it with an output directory that is already present? Will it

- overwrite it

- warn you and continue

- throw an exception and exit

The hadoop job will throw an exception and exit.

How can you set an arbitrary number of mappers to be created for a job in Hadoop?

This is a trick question. You cannot set it.

How can you set an arbitrary number of reducers to be created for a job in Hadoop?

You can either do it programmatically by using the method setNumReduceTasks in the JobConf class, or set it up as a configuration setting.

How will you write a custom partitioner for a Hadoop job?

To have Hadoop use a custom partitioner, you will have to do at least the following three things:

- Create a new class that extends the Partitioner class

- Override the method getPartition

- In the wrapper that runs the MapReduce job, either

  - add the custom partitioner to the job programmatically using the method setPartitionerClass, or

  - add the custom partitioner to the job through a config file (if your wrapper reads from a config file or Oozie)
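The three steps can be mirrored in a toy Python analogue. This is not the Hadoop API: the `Partitioner` base class, `FirstLetterPartitioner`, and the `Job` wrapper with `set_partitioner_class` are hypothetical stand-ins that only show the shape of the pattern (extend, override, register).

```python
class Partitioner:
    """Stand-in for the framework's Partitioner base class (hypothetical)."""

    def get_partition(self, key, value, num_partitions):
        raise NotImplementedError


class FirstLetterPartitioner(Partitioner):
    """Steps 1 and 2: extend the base class and override get_partition."""

    def get_partition(self, key, value, num_partitions):
        if not key:
            return 0  # assumption: empty keys go to partition 0
        return ord(key[0].lower()) % num_partitions


class Job:
    """Tiny stand-in for the wrapper that configures and runs the job."""

    def __init__(self, num_reducers):
        self.num_reducers = num_reducers
        self.partitioner = None

    def set_partitioner_class(self, cls):
        # Step 3: register the custom partitioner programmatically.
        self.partitioner = cls()

    def route(self, key, value):
        return self.partitioner.get_partition(key, value, self.num_reducers)


job = Job(num_reducers=3)
job.set_partitioner_class(FirstLetterPartitioner)
for word in ["Apple", "avocado", "zebra"]:
    print(word, "->", job.route(word, 1))
```

Keys that share a first letter, regardless of case, always land in the same partition, which is the kind of guarantee a custom partitioner is usually written to provide.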

How did you debug your Hadoop code?

There can be several ways of doing this, but the most common ways are:

- By using counters

- The web interface provided by Hadoop framework

Did you ever build a production process in Hadoop? If yes, what was the process when your Hadoop job failed for any reason?

It's an open-ended question, but most candidates, if they have written a production job, should talk about some type of alert mechanism, such as an email being sent or their monitoring system sending an alert. Since Hadoop works on unstructured data, it's very important to have a good alerting system for errors, since unexpected data can very easily break the job.

Did you ever run into a lopsided job that resulted in an out-of-memory error? If yes, how did you handle it?

This is an open-ended question, but a candidate who claims to be an intermediate developer and has worked on a large data set (10-20 GB minimum) should have run into this problem. There can be many ways to handle this problem, but the most common way is to alter your algorithm and break the job down into more MapReduce phases, or to use a combiner if possible.

What is HDFS?

HDFS, the Hadoop Distributed File System, is a distributed file system designed to hold very large amounts of data (terabytes or even petabytes) and provide high-throughput access to this information. Files are stored in a redundant fashion across multiple machines to ensure their durability in the face of failure and their high availability to highly parallel applications.

What does the statement "HDFS is a block-structured file system" mean?

It means that in HDFS individual files are broken into blocks of a fixed size. These blocks are stored across a cluster of one or more machines with data storage capacity.

What does the term "Replication factor" mean?

Replication factor is the number of times a file needs to be replicated in HDFS.

What is the default replication factor in HDFS?

3

What is the default block size of an HDFS block?

64 MB

What is the benefit of having such a big block size (when compared to the block size of a Linux file system like ext)?

It allows HDFS to decrease the amount of metadata storage required per file (the list of blocks per file will be smaller as the size of individual blocks increases). Furthermore, it allows for fast streaming reads of data, by keeping large amounts of data sequentially laid out on the disk.

Why is it recommended to have a few very large files instead of a lot of small files in HDFS?

This is because the Namenode holds the metadata of each and every file in HDFS, so more files means more metadata. Since the Namenode loads all the metadata into memory for speed, having a lot of files may make the metadata big enough to exceed the size of the memory on the Namenode.
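A rough back-of-the-envelope sketch makes the difference concrete. The figure of about 150 bytes of Namenode memory per file or block entry is an assumption (a commonly quoted rule of thumb, not a measured value), and the function name `namenode_bytes` is hypothetical.

```python
BYTES_PER_OBJECT = 150  # assumption: rough memory cost per file or block entry


def namenode_bytes(num_files, blocks_per_file):
    """Estimate metadata size: one entry per file plus one entry per block."""
    return num_files * (1 + blocks_per_file) * BYTES_PER_OBJECT


# The same 1 TB of data stored two ways, with 64 MB blocks.
few_large = namenode_bytes(num_files=16, blocks_per_file=1024)  # 16 files of 64 GB
many_small = namenode_bytes(num_files=16 * 1024 * 1024, blocks_per_file=1)  # 64 KB files

print(f"few large files : {few_large / 1e6:.2f} MB of metadata")
print(f"many small files: {many_small / 1e6:.2f} MB of metadata")
```

Under these assumptions the small-file layout needs on the order of two thousand times more Namenode memory for the same amount of data.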

True/false question. What is the lowest granularity at which you can apply the replication factor in HDFS?

- You can choose replication factor per directory

- You can choose replication factor per file in a directory

- You can choose replication factor per block of a file

- True

- True

- False

What is a datanode in HDFS?

Individual machines in the HDFS cluster that hold blocks of data are called datanodes.

What is a Namenode in HDFS?

The Namenode stores all the metadata for the file system.

What alternate way does HDFS provide to recover data in case a Namenode, without backup, fails and cannot be recovered?

There is no way. If the Namenode dies and there is no backup, then there is no way to recover the data.

Describe how an HDFS client reads a file in HDFS: does it talk to the datanodes or the Namenode, and how does the data flow?

To open a file, a client contacts the Name Node and retrieves a list of locations for the blocks that comprise the file. These locations identify the Data Nodes which hold each block. Clients then read file data directly from the Data Node servers, possibly in parallel. The Name Node is not directly involved in this bulk data transfer, keeping its overhead to a minimum.
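The read path described above can be mimicked with a toy in-memory model. Everything here is hypothetical (the `NameNode` and `DataNode` classes, the block ids, the file path) and no real HDFS client API is used; the sketch only shows that metadata comes from the Name Node while the bytes come from the Data Nodes.

```python
class DataNode:
    """Stores the actual block bytes (hypothetical toy class)."""

    def __init__(self):
        self.blocks = {}  # block id -> bytes

    def read_block(self, block_id):
        return self.blocks[block_id]


class NameNode:
    """Stores only metadata: the blocks of each file and where replicas live."""

    def __init__(self):
        self.block_map = {}  # path -> [(block id, [datanode names])]

    def get_block_locations(self, path):
        return self.block_map[path]


def read_file(path, namenode, datanodes):
    """Client-side read: ask the NameNode for locations, then pull bytes from DataNodes."""
    chunks = []
    for block_id, locations in namenode.get_block_locations(path):
        first_replica = datanodes[locations[0]]  # assumption: always use the first replica
        chunks.append(first_replica.read_block(block_id))
    return b"".join(chunks)


# Toy cluster: one file made of two blocks held on different DataNodes.
datanodes = {"dn1": DataNode(), "dn2": DataNode()}
datanodes["dn1"].blocks["blk_1"] = b"hello "
datanodes["dn2"].blocks["blk_2"] = b"hdfs"
namenode = NameNode()
namenode.block_map["/demo.txt"] = [("blk_1", ["dn1"]), ("blk_2", ["dn2"])]

print(read_file("/demo.txt", namenode, datanodes))  # b'hello hdfs'
```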

Using the Linux command line, how will you

- List the files in an HDFS directory

- Create a directory in HDFS

- Copy file from your local directory to HDFS

hadoop fs -ls

hadoop fs -mkdir

hadoop fs -put localfile hdfsfile

Advantages of Hadoop?

- Bringing compute and storage together on commodity hardware: The result is blazing speed at low cost.

- Price performance: The Hadoop big data technology provides significant cost savings (think a factor of approximately 10) with significant performance improvements (again, think factor of 10). Your mileage may vary. If the existing technology can be so dramatically trounced, it is worth examining if Hadoop can complement or replace aspects of your current architecture.

- Linear Scalability: Every parallel technology makes claims about scale-up. Hadoop has genuine scalability, since the latest release is expanding the limit on the number of nodes to beyond 4,000.

- Full access to unstructured data: A highly scalable data store with a good parallel programming model, MapReduce, has been a challenge for the industry for some time. Hadoop programming model does not solve all problems, but it is a strong solution for many tasks.

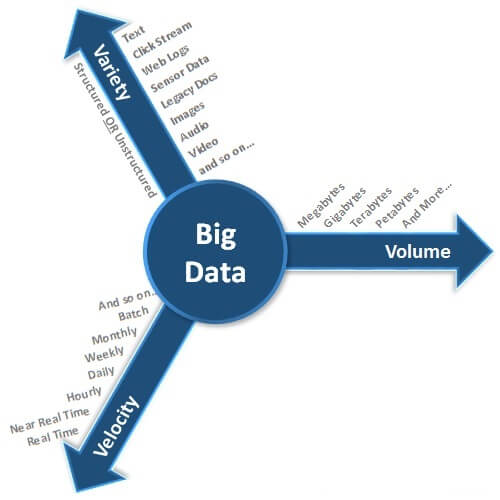

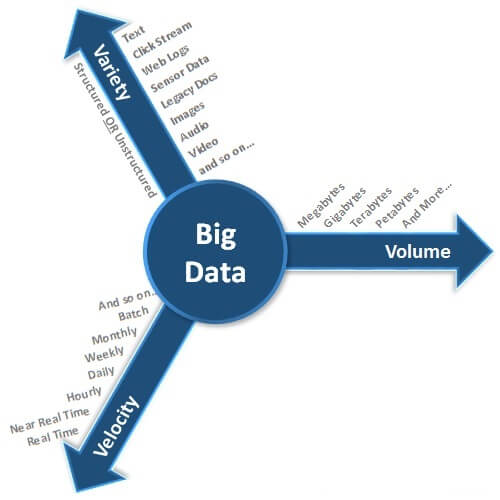

Definition of Big data?

According to Gartner, Big data can be defined as high volume, velocity and variety information requiring innovative and cost effective forms of information processing for enhanced decision making.

How does Big data differ from a database?

Datasets that are beyond the ability of a conventional database to store, analyze and manage can be defined as Big data. Big data technology extracts the required information from large volumes of data, whereas the storage capacity of a database is limited.

Who is using Hadoop? Give some examples.

- A9.com

- Amazon

- Adobe

- AOL

- Baidu

- Cooliris

- Facebook

- NSF-Google

- IBM

- LinkedIn

- Ning

- PARC

- Rackspace

- StumbleUpon

- Twitter

- Yahoo!

Pig for Hadoop - Give some points?

Pig is a data-flow-oriented language for analyzing large data sets.

It is a platform for analyzing large data sets that consists of a high-level language for expressing data analysis programs, coupled with infrastructure for evaluating these programs. The salient property of Pig programs is that their structure is amenable to substantial parallelization, which in turn enables them to handle very large data sets.

At the present time, Pig's infrastructure layer consists of a compiler that produces sequences of Map-Reduce programs, for which large-scale parallel implementations already exist (e.g., the Hadoop subproject). Pig's language layer currently consists of a textual language called Pig Latin, which has the following key properties:

Ease of programming.

It is trivial to achieve parallel execution of simple, "embarrassingly parallel" data analysis tasks. Complex tasks comprised of multiple interrelated data transformations are explicitly encoded as data flow sequences, making them easy to write, understand, and maintain.

Optimization opportunities.

The way in which tasks are encoded permits the system to optimize their execution automatically, allowing the user to focus on semantics rather than efficiency.

Extensibility.

Users can create their own functions to do special-purpose processing.

Features of Pig:

- data transformation functions

- datatypes include sets, associative arrays, tuples

- high-level language for marshalling data

- developed at Yahoo!

Hive for Hadoop - Give some points?

Hive is a data warehouse system for Hadoop that facilitates easy data summarization, ad-hoc queries, and the analysis of large datasets stored in Hadoop compatible file systems. Hive provides a mechanism to project structure onto this data and query the data using a SQL-like language called HiveQL. At the same time this language also allows traditional map/reduce programmers to plug in their custom mappers and reducers when it is inconvenient or inefficient to express this logic in HiveQL.

Key points:

- SQL-based data warehousing application

  - features similar to Pig

  - more strictly SQL-type

- Supports SELECT, JOIN, GROUP BY, etc.

- Analyzing very large data sets

  - log processing, text mining, document indexing

MapReduce in Hadoop?

MapReduce:

It is a framework for processing huge datasets in parallel using a large number of computers, referred to as a cluster. It involves two processes, namely Map and Reduce.

Map process:

In this process the input is taken by the master node, which divides it into smaller tasks and distributes them to the worker nodes. The worker nodes process these subtasks and pass the results back to the master node.

Reduce process:

In this process the master node combines all the answers provided by the worker nodes to get the result of the original task. The main advantage of MapReduce is that the map and reduce operations are performed in distributed mode. Since each operation is independent, each map can be performed in parallel, reducing the net computing time.
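The two processes can be sketched on a single machine. This is a simplified illustration, not how Hadoop schedules work: the record format ("year,temperature"), the function names and the sample data are all hypothetical, and everything runs sequentially in one process.

```python
from collections import defaultdict


def map_fn(record):
    """Map: turn one 'year,temperature' record into a (year, temperature) pair."""
    year, temp = record.split(",")
    yield year, int(temp)


def reduce_fn(key, values):
    """Reduce: combine all values for one key into a single answer."""
    return key, max(values)


def run_job(records):
    groups = defaultdict(list)
    for record in records:  # each map call is independent of the others
        for key, value in map_fn(record):
            groups[key].append(value)  # group the intermediate values by key
    return [reduce_fn(key, groups[key]) for key in sorted(groups)]


print(run_job(["1949,111", "1950,0", "1949,78", "1950,22"]))
# [('1949', 111), ('1950', 22)]
```

Because no `map_fn` call depends on another, the map calls could run on different machines at the same time, which is where the reduction in computing time comes from.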

What is a heartbeat in HDFS?

A heartbeat is a signal indicating that a node is alive. A datanode sends heartbeats to the Namenode, and a TaskTracker sends its heartbeats to the JobTracker. If the Namenode or JobTracker does not receive a heartbeat, it decides that there is some problem with the datanode, or that the TaskTracker is unable to perform the assigned task.

What is metadata?

Metadata is the information about the data stored in datanodes, such as the location of the file, the size of the file and so on.

Is the Namenode also commodity hardware?

No. The Namenode can never be commodity hardware because the entire HDFS relies on it.

It is the single point of failure in HDFS. The Namenode has to be a high-availability machine.

Can Hadoop be compared to a NoSQL database like Cassandra?

Though NoSQL is the closest technology that can be compared to Hadoop, it has its own pros and cons. There is no DFS in NoSQL. Hadoop is not a database. It’s a filesystem (HDFS) and a distributed programming framework (MapReduce).

What is a key-value pair in Hadoop?

Key value pair is the intermediate data generated by maps and sent to reduces for generating the final output.

What is the difference between MapReduce engine and HDFS cluster?

HDFS cluster is the name given to the whole configuration of master and slaves where data is stored. Map Reduce Engine is the programming module which is used to retrieve and analyze data.

What is a rack?

A rack is a physical collection of datanodes that are stored at a single location. Different racks can be physically located at different places, and there can be multiple racks in a single location.

How is indexing done in HDFS?

Hadoop has its own way of indexing. Depending on the block size, once the data is stored, HDFS keeps storing, with the last part of each piece of data, a pointer that says where the next part of the data will be. In fact, this is the base of HDFS.

History of Hadoop?

Hadoop was created by Doug Cutting, the creator of Apache Lucene, the widely used text search library. Hadoop has its origins in Apache Nutch, an open source web search engine, itself a part of the Lucene project.

The name Hadoop is not an acronym; it’s a made-up name. The project’s creator, Doug Cutting, explains how the name came about:

"The name my kid gave a stuffed yellow elephant. Short, relatively easy to spell and pronounce, meaningless, and not used elsewhere: those are my naming criteria."

Subprojects and “contrib” modules in Hadoop also tend to have names that are unrelated to their function, often with an elephant or other animal theme (“Pig,” for example). Smaller components are given more descriptive (and therefore more mundane) names. This is a good principle, as it means you can generally work out what something does from its name. For example, the jobtracker keeps track of MapReduce jobs.

What is meant by Volunteer Computing?

Volunteer computing projects work by breaking the problem they are trying to solve into chunks called work units, which are sent to computers around the world to be analyzed.

SETI@home is the most well-known of many volunteer computing projects.

How does Hadoop differ from SETI (volunteer computing)?

Although SETI (Search for Extra-Terrestrial Intelligence) may be superficially similar to MapReduce (breaking a problem into independent pieces to be worked on in parallel), there are some significant differences. The SETI@home problem is very CPU-intensive, which makes it suitable for running on hundreds of thousands of computers across the world, since the time to transfer the work unit is dwarfed by the time to run the computation on it. Volunteers are donating CPU cycles, not bandwidth.

MapReduce is designed to run jobs that last minutes or hours on trusted, dedicated hardware running in a single data center with very high aggregate bandwidth interconnects. By contrast, SETI@home runs a perpetual computation on untrusted machines on the Internet with highly variable connection speeds and no data locality.

Compare RDBMS and MapReduce?

| | RDBMS | MapReduce |
|---|---|---|
| Data size | Gigabytes | Petabytes |
| Access | Interactive and batch | Batch |
| Updates | Read and write many times | Write once, read many times |
| Structure | Static schema | Dynamic schema |
| Integrity | High | Low |
| Scaling | Nonlinear | Linear |

What is HBase?

A distributed, column-oriented database. HBase uses HDFS for its underlying storage, and supports both batch-style computations using MapReduce and point queries (random reads).

What is ZooKeeper?

A distributed, highly available coordination service. ZooKeeper provides primitives such as distributed locks that can be used for building distributed applications.

What is Chukwa?

A distributed data collection and analysis system. Chukwa runs collectors that store data in HDFS, and it uses MapReduce to produce reports. (At the time of this writing, Chukwa had only recently graduated from a “contrib” module in Core to its own subproject.)

What is Avro?

A data serialization system for efficient, cross-language RPC, and persistent data storage. (At the time of this writing, Avro had been created only as a new subproject, and no other Hadoop subprojects were using it yet.)

What is the Core subproject in Hadoop?

A set of components and interfaces for distributed filesystems and general I/O (serialization, Java RPC, persistent data structures).

What are all the Hadoop subprojects?

Pig, Chukwa, Hive, HBase, MapReduce, HDFS, ZooKeeper, Core, Avro

What is a split?

Hadoop divides the input to a MapReduce job into fixed-size pieces called input splits, or just splits. Hadoop creates one map task for each split, which runs the user-defined map function for each record in the split.

Having many splits means the time taken to process each split is small compared to the time to process the whole input. So if we are processing the splits in parallel, the processing is better load-balanced.

On the other hand, if splits are too small, then the overhead of managing the splits and of map task creation begins to dominate the total job execution time. For most jobs, a good split size tends to be the size of an HDFS block, 64 MB by default, although this can be changed for the cluster.

Map tasks write their output to local disk, not to HDFS. Why is this?

Map output is intermediate output: it’s processed by reduce tasks to produce the final output, and once the job is complete the map output can be thrown away. So storing it in HDFS, with replication, would be overkill. If the node running the map task fails before the map output has been consumed by the reduce task, then Hadoop will automatically rerun the map task on another node to recreate the map output.

MapReduce data flow with a single reduce task- Explain?

The input to a single reduce task is normally the output from all mappers.

The sorted map outputs have to be transferred across the network to the node where the reduce task is running, where they are merged and then passed to the user-defined reduce function. The output of the reduce is normally stored in HDFS for reliability.

For each HDFS block of the reduce output, the first replica is stored on the local node, with other replicas being stored on off-rack nodes.

MapReduce data flow with multiple reduce tasks- Explain?

When there are multiple reducers, the map tasks partition their output, each creating one partition for each reduce task. There can be many keys (and their associated values) in each partition, but the records for every key are all in a single partition. The partitioning can be controlled by a user-defined partitioning function, but normally the default partitioner, which buckets keys using a hash function, works well.

MapReduce data flow with no reduce tasks- Explain?

It’s also possible to have zero reduce tasks. This can be appropriate when you don’t need the shuffle since the processing can be carried out entirely in parallel.

In this case, the only off-node data transfer is when the map tasks write to HDFS.

What is a block in HDFS?

Filesystems deal with data in blocks, which are an integral multiple of the disk block size. Filesystem blocks are typically a few kilobytes in size, while disk blocks are normally 512 bytes. HDFS has the same concept of a block, but as a much larger unit: 64 MB by default.

Why is a Block in HDFS So Large?

HDFS blocks are large compared to disk blocks, and the reason is to minimize the cost of seeks. By making a block large enough, the time to transfer the data from the disk can be made to be significantly larger than the time to seek to the start of the block. Thus the time to transfer a large file made of multiple blocks operates at the disk transfer rate.
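A small worked calculation shows the effect. The disk figures are assumptions chosen only for illustration (a 10 ms seek time and a 100 MB/s transfer rate), and the function name `seek_overhead` is hypothetical.

```python
def seek_overhead(block_bytes, seek_seconds=0.010, transfer_bytes_per_sec=100 * 10**6):
    """Fraction of the total time to read one block that is spent seeking."""
    transfer_seconds = block_bytes / transfer_bytes_per_sec
    return seek_seconds / (seek_seconds + transfer_seconds)


for label, size in [("4 KB block", 4 * 1024), ("64 MB block", 64 * 1024**2)]:
    print(f"{label}: {seek_overhead(size):.1%} of read time is seeking")
```

Under these assumptions, reading a 4 KB block is almost entirely seek time, while for a 64 MB block the seek is a small fraction of the read, so a multi-block file is read at close to the disk transfer rate.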

File permissions in HDFS?

HDFS has a permissions model for files and directories.

There are three types of permission: the read permission (r), the write permission (w) and the execute permission (x). The read permission is required to read files or list the contents of a directory. The write permission is required to write a file, or for a directory, to create or delete files or directories in it. The execute permission is ignored for a file since you can’t execute a file on HDFS, and for a directory it is required to access its children.

What is Thrift in HDFS?

The Thrift API in the “thriftfs” contrib module exposes Hadoop filesystems as an Apache Thrift service, making it easy for any language that has Thrift bindings to interact with a Hadoop filesystem, such as HDFS.

To use the Thrift API, run a Java server that exposes the Thrift service, and acts as a proxy to the Hadoop filesystem. Your application accesses the Thrift service, which is typically running on the same machine as your application.

How does Hadoop interact with C?

Hadoop provides a C library called libhdfs that mirrors the Java FileSystem interface.

It works using the Java Native Interface (JNI) to call a Java filesystem client.

The C API is very similar to the Java one, but it typically lags the Java one, so newer features may not be supported. You can find the generated documentation for the C API in the libhdfs/docs/api directory of the Hadoop distribution.

What is FUSE in Hadoop?

Filesystem in Userspace (FUSE) allows filesystems that are implemented in user space to be integrated as a Unix filesystem. Hadoop’s Fuse-DFS contrib module allows any Hadoop filesystem (but typically HDFS) to be mounted as a standard filesystem. You can then use Unix utilities (such as ls and cat) to interact with the filesystem.

Fuse-DFS is implemented in C using libhdfs as the interface to HDFS. Documentation for compiling and running Fuse-DFS is located in the src/contrib/fuse-dfs directory of the Hadoop distribution.

Explain WebDAV in Hadoop?

WebDAV is a set of extensions to HTTP to support editing and updating files. WebDAV shares can be mounted as filesystems on most operating systems, so by exposing HDFS (or other Hadoop filesystems) over WebDAV, it’s possible to access HDFS as a standard filesystem.

What is Sqoop in Hadoop?

It is a tool designed to transfer data between a relational database management system (RDBMS) and Hadoop HDFS.

Thus, we can use Sqoop to import data from an RDBMS such as MySQL or Oracle into HDFS, as well as to export data from HDFS files back to an RDBMS.

Sqoop reads the table row by row, and the import process is performed in parallel. Thus, the output may be in multiple files.

Example (uppercase words are placeholders):

sqoop import --connect JDBC_URL --table TABLE --where "CONDITION" --target-dir DIRECTORY